Wondering how data from multiple sources, such as ad exchanges, in-house programmatic campaigns, DSPs and SSPs, and performance metrics, comes together in one holistic, actionable report? ETL enables this seamless integration of vast and scattered data.

What Is ETL? Extract, Transform, Load

ETL (Extract, Transform, Load) is a process that allows businesses to harmonize disparate data sources into a structured, organized form ready for analysis. By extracting raw data, transforming it into standard formats, and loading it into a central system, ETL brings order to scattered data so that teams can turn large volumes of potentially overwhelming information into actionable insights.

ETL can be considered the foundation of data integration. First, data is pulled from multiple sources, possibly including older or legacy systems. Next, that raw data is transformed, meaning it is cleaned up and reformatted. Finally, the data is loaded into a central place (usually a data warehouse or data lake), where it is ready for any kind of analytics, from routine reporting to advanced machine learning.
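To make the three steps concrete, here is a minimal Python sketch using only the standard library. The two sources are hypothetical in-memory CSV exports (an ad server report and a DSP report with a different layout and delimiter); the transform step maps both onto one schema, and an in-memory SQLite database stands in for the data warehouse. All table and column names are illustrative assumptions, not any particular product's format.

```python
import csv
import io
import sqlite3

# Hypothetical raw exports: the same kind of data, in different layouts.
AD_SERVER_CSV = """date,ad_unit,impressions
2024-05-01,homepage_top,1200
2024-05-01,sidebar,800
"""
DSP_CSV = """Day;Placement;Imps
2024-05-01;homepage_top;300
"""

def extract(text, delimiter=","):
    """Extract: parse a raw CSV export into a list of dicts (all values are strings)."""
    return list(csv.DictReader(io.StringIO(text), delimiter=delimiter))

def transform(ad_rows, dsp_rows):
    """Transform: map both layouts onto one schema and convert types."""
    unified = [("ad_server", r["date"], r["ad_unit"], int(r["impressions"])) for r in ad_rows]
    unified += [("dsp", r["Day"], r["Placement"], int(r["Imps"])) for r in dsp_rows]
    return unified

def load(rows, conn):
    """Load: write the unified rows into one central table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ad_performance "
        "(source TEXT, day TEXT, ad_unit TEXT, impressions INTEGER)")
    conn.executemany("INSERT INTO ad_performance VALUES (?, ?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(AD_SERVER_CSV), extract(DSP_CSV, delimiter=";")), conn)

# A single query now spans both sources.
for ad_unit, total in conn.execute(
        "SELECT ad_unit, SUM(impressions) FROM ad_performance GROUP BY ad_unit ORDER BY ad_unit"):
    print(ad_unit, total)  # homepage_top 1500, then sidebar 800
```

Once both sources share a schema, one query can report across them, which is the "single holistic report" idea in practice.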

The ETL pipeline is a crucial component of data analytics and machine learning projects. Imagine how actionable a business report becomes when the data behind it is clean, consistent, and aligned with common business goals. ETL helps organizations:

- Collect relevant data from multiple systems.

- Ensure data quality, so that data is consistent and reliable.

- Prepare data in a form that meets business requirements for analysis.

Why Is ETL Important?

Today, organizations have access to an overwhelming amount of information from many different sources. Each source carries its own metrics, ranging from website user data and performance metrics to reviews and social media activity. Without an ETL solution in place, the question remains: how do you overcome disjointed data and turn it into something useful?

For example, a publisher may wish to forecast future demand for its inventory. ETL makes it possible to collect data from ad servers, convert it into the desired form, and load it into a central data store. With that data in one place, the publisher can identify trends, manage inventory efficiently, and predict user behavior more accurately. Similarly, a marketing department may want to combine CRM data with social media information to build a history of customer behavior, which ETL enables by organizing the data for easy retrieval and analysis.

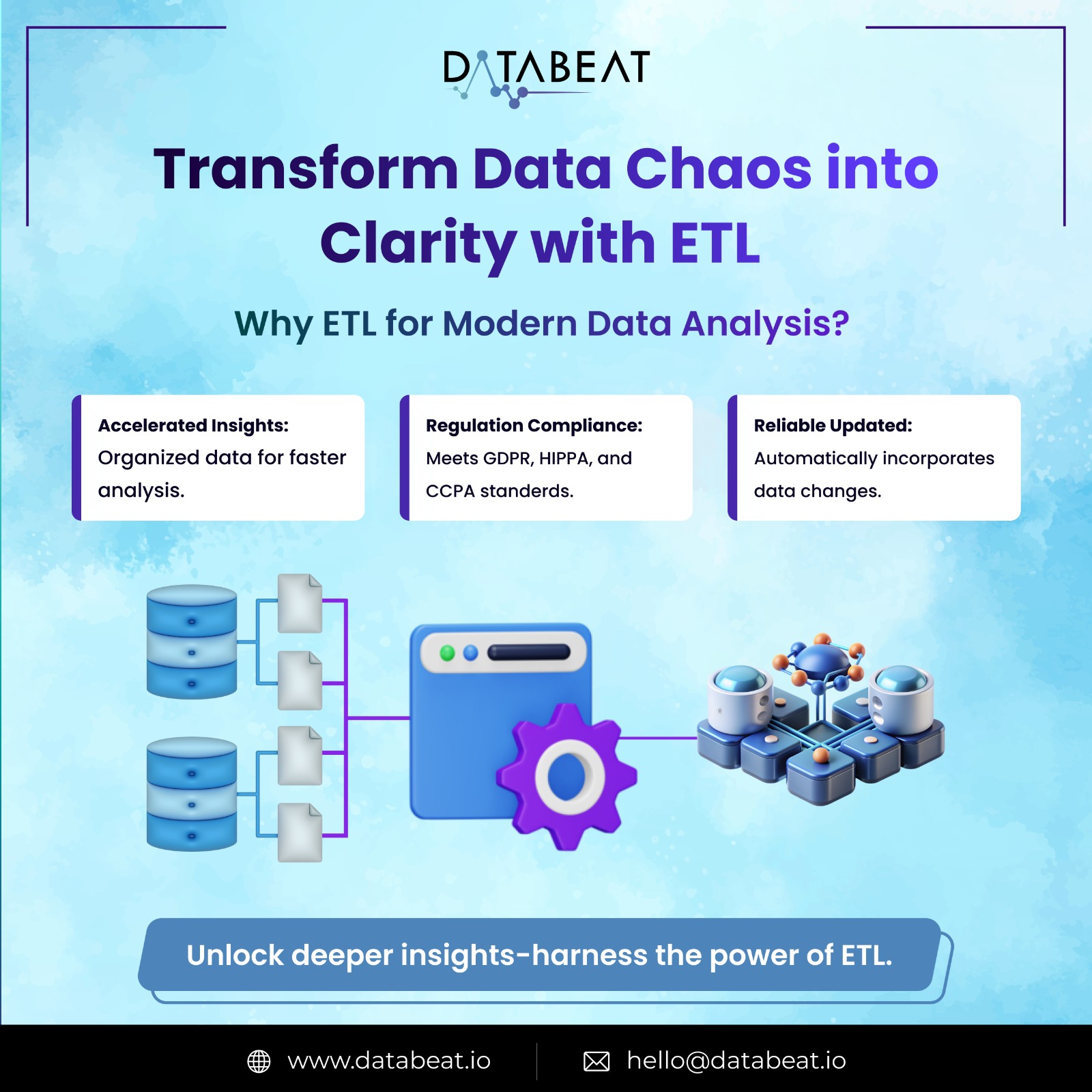

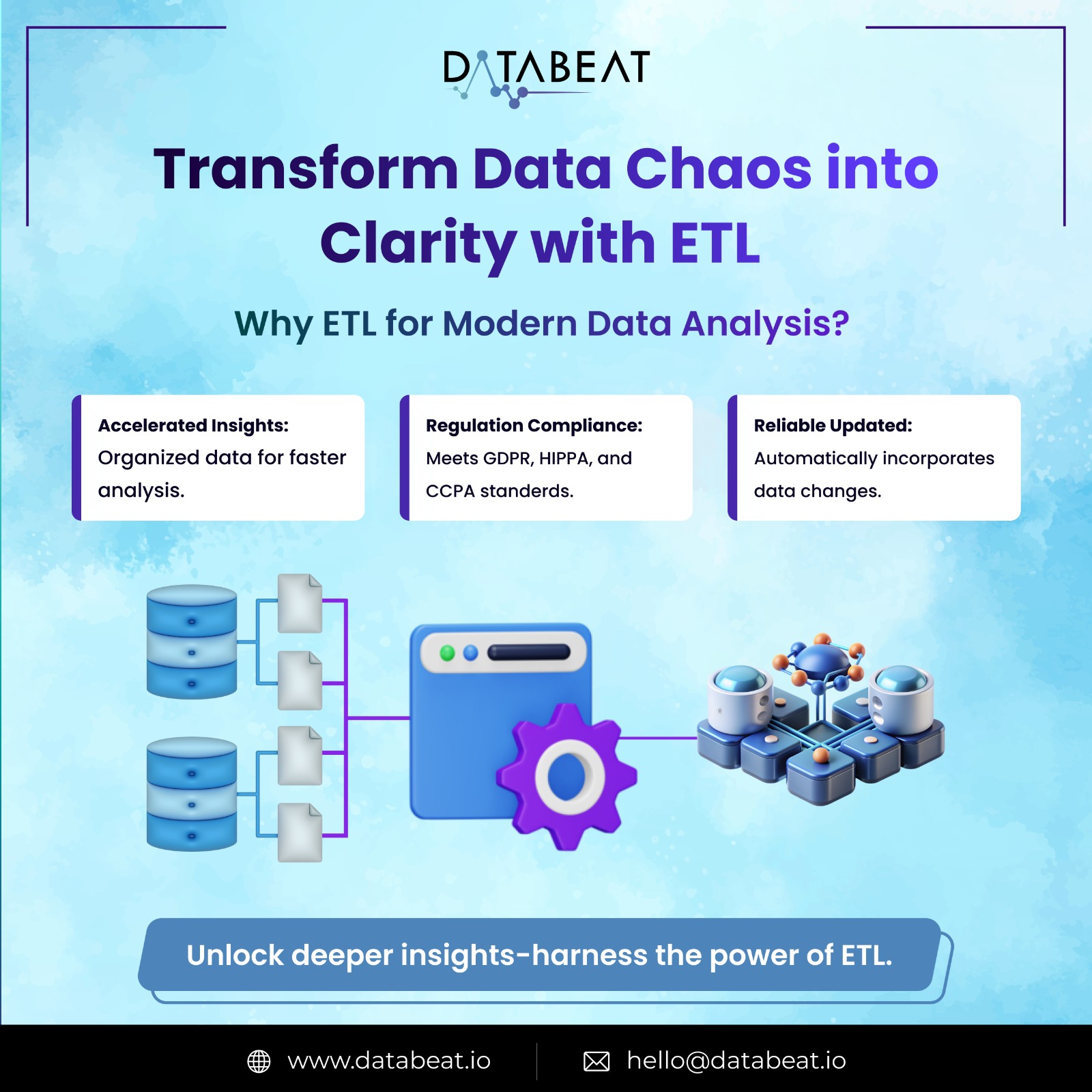

Some of the Biggest Benefits of ETL Include:

- Efficient Data Analysis: By aligning raw data with the structure of the target system, ETL pipelines make data easier and faster to analyze. Data is structured and ready for a specific purpose, allowing for quicker insights and decisions.

- Advanced compliance: ETL can be configured to exclude or mask sensitive information, helping organizations meet data privacy regulations such as GDPR, HIPAA, and CCPA.

- Reliable change tracking: Using technologies such as change data capture (CDC), ETL pipelines can identify and incorporate database changes, keeping the data repository current and accurate.
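As an illustration of excluding or obscuring sensitive information, the sketch below drops fields that analysis does not need and replaces a direct identifier with a keyed hash (HMAC-SHA-256 from Python's standard library), so records can still be joined on that field without exposing the raw value. This is pseudonymization, not encryption; the field names and the key are hypothetical assumptions.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice it would live in a secrets manager, not in code.
PSEUDONYM_KEY = b"replace-with-a-secret-key"

DROP_FIELDS = {"phone", "street_address"}  # not needed for analysis: excluded entirely
HASH_FIELDS = {"email"}                    # needed as a join key, but not in clear text

def pseudonymize(value: str) -> str:
    """Return a stable keyed hash, so equal inputs still match after masking."""
    normalized = value.strip().lower()
    return hmac.new(PSEUDONYM_KEY, normalized.encode("utf-8"), hashlib.sha256).hexdigest()

def mask_record(record: dict) -> dict:
    masked = {}
    for field, value in record.items():
        if field in DROP_FIELDS:
            continue
        if field in HASH_FIELDS and value is not None:
            masked[field] = pseudonymize(value)
        else:
            masked[field] = value
    return masked

row = {"email": "Jane@Example.com", "phone": "555-0100", "country": "DE", "spend": 42.0}
print(mask_record(row))
```

Normalizing before hashing means "Jane@Example.com" and " jane@example.com" produce the same token, so joins across sources still work on the masked value.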

Ultimately, ETL enables organizations to build a centralized data warehouse that serves as ‘a single version of the truth.’ This helps ensure that everyone in the organization works from the same correct, up-to-date information, which is critical for successful, coordinated operations across departments.

How Does ETL Work?

Now that we have seen why ETL matters, let’s take a closer look at each of its three stages: Extract, Transform, and Load. This is where the magic of data transformation really happens!

1. Extract: Collecting Data from Various Sources

In the Extract stage, data is obtained from various sources such as SQL or NoSQL databases, CRM and ERP systems, flat files, or web pages. This data is often unstructured or semi-structured and is stored in many different formats. Once extracted, the data is stored in a ‘staging area’, which is simply a temporary holding place for extracted data until the next step of the process. A good analogy is gathering the ingredients before you start cooking.
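A staging area can be as simple as a table that stores raw extracted records exactly as they arrived, tagged with their source and extraction time, so the Transform step always starts from an unmodified copy. The minimal sketch below uses SQLite and JSON from Python's standard library; the table name `staging_raw` and the sample records are hypothetical.

```python
import json
import sqlite3
from datetime import datetime, timezone

def create_staging(conn):
    # Raw payloads are stored as-is; typing and cleaning happen later, in Transform.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS staging_raw "
        "(source TEXT NOT NULL, extracted_at TEXT NOT NULL, payload TEXT NOT NULL)")

def stage(conn, source, records):
    """Land extracted records unchanged, tagged with where and when they came from."""
    ts = datetime.now(timezone.utc).isoformat()
    conn.executemany(
        "INSERT INTO staging_raw (source, extracted_at, payload) VALUES (?, ?, ?)",
        [(source, ts, json.dumps(r)) for r in records])
    conn.commit()

def read_staged(conn, source):
    """Hand the staged records for one source to the Transform step."""
    rows = conn.execute("SELECT payload FROM staging_raw WHERE source = ?", (source,))
    return [json.loads(payload) for (payload,) in rows]

conn = sqlite3.connect(":memory:")
create_staging(conn)
# Hypothetical extracts: one from a CRM API, one from a flat file.
stage(conn, "crm", [{"id": 7, "name": "Acme", "tier": "gold"}])
stage(conn, "flat_file", [{"sku": "A-1", "qty": "3"}])  # qty is still a string here
print(read_staged(conn, "flat_file"))
```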

Data extraction can occur in one or more of the following modes:

- Update Notification: The source system sends a notification whenever a record changes.

- Incremental Extraction: Each extraction includes only the changes made since the previous one.

- Full Extraction: All of the data is extracted at once. This mode is commonly used when the source system cannot identify which individual records have changed.
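Incremental extraction is often implemented with a "high-water mark": the pipeline remembers the latest `updated_at` value it has seen and asks the source only for rows changed after it. The sketch below contrasts that with a full extraction against a hypothetical `orders` table in SQLite; it assumes the source maintains a reliable `updated_at` column in a sortable ISO-8601 format.

```python
import sqlite3

def full_extract(src):
    """Full extraction: pull every row, e.g. when the source can't flag changes."""
    return src.execute("SELECT id, amount, updated_at FROM orders ORDER BY id").fetchall()

def incremental_extract(src, watermark):
    """Incremental extraction: only rows changed since the saved high-water mark."""
    rows = src.execute(
        "SELECT id, amount, updated_at FROM orders WHERE updated_at > ? ORDER BY updated_at",
        (watermark,)).fetchall()
    new_watermark = rows[-1][2] if rows else watermark  # persist this for the next run
    return rows, new_watermark

src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")
src.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (1, 10.0, "2024-05-01T09:00:00"),
    (2, 25.0, "2024-05-01T10:00:00"),
])
first, wm = incremental_extract(src, "")  # first run behaves like a full extraction

# Changes made at the source between runs: one update, one insert.
src.execute("UPDATE orders SET amount = 30.0, updated_at = '2024-05-02T08:00:00' WHERE id = 2")
src.execute("INSERT INTO orders VALUES (3, 5.0, '2024-05-02T09:00:00')")

second, wm = incremental_extract(src, wm)
print(len(first), len(second), wm)  # 2 2 2024-05-02T09:00:00
```

The strict `>` comparison assumes no row is later committed with a timestamp at or before the saved watermark; pipelines that cannot guarantee this often re-read a small overlap window or use change data capture instead.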

2. Transform: Cleaning and Shaping Data for Analysis

Once the data is in the staging area and has passed validation checks, the Transform phase begins. In this phase, the data typically moves through several steps:

- Data cleaning: Errors, formatting problems, and inconsistencies are corrected.

- Aggregation & Deduplication: Duplicate records are detected and removed, and data is aggregated into summaries or consolidated views.

- Calculation & Translation: Some data points need to be recalculated or converted, for example by translating figures into a common currency or unit of measurement.

- Joining & Splitting: Related datasets are combined into one or more central datasets; alternatively, data elements may be split apart for further analysis.

- Summarization & Encryption: Large datasets are condensed into shorter, relevant summaries for interpretation, while sensitive fields are removed or encrypted to comply with data privacy policies.
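Several of these steps can be sketched on a handful of hypothetical order records: cleaning (trimming, normalizing case, parsing amounts), deduplication by `order_id`, currency translation with fixed illustrative exchange rates, and aggregation into revenue per country. The rates, field names, and rules for dropping rows are assumptions chosen for the example.

```python
from collections import defaultdict

# Hypothetical fixed rates; a real pipeline would look up the rate for each date.
TO_USD = {"USD": 1.0, "EUR": 1.10, "GBP": 1.25}

raw = [
    {"order_id": "A1", "country": " us ", "amount": "100.00", "currency": "usd"},
    {"order_id": "A1", "country": "US", "amount": "100.00", "currency": "USD"},  # duplicate
    {"order_id": "B7", "country": "de", "amount": "50,00", "currency": "EUR"},   # comma decimal
    {"order_id": "C3", "country": "GB", "amount": "", "currency": "GBP"},        # missing amount
]

def clean(row):
    """Cleaning: trim, normalize case, parse the amount; return None if unusable.

    Assumes a comma is only ever a decimal separator, never a thousands separator.
    """
    amount = row["amount"].strip().replace(",", ".")
    if not amount:
        return None
    return {"order_id": row["order_id"].strip(),
            "country": row["country"].strip().upper(),
            "amount": float(amount),
            "currency": row["currency"].strip().upper()}

def transform(rows):
    seen, cleaned = set(), []
    for row in rows:
        r = clean(row)
        if r is None or r["order_id"] in seen:  # drop unusable rows and duplicates
            continue
        seen.add(r["order_id"])
        r["amount_usd"] = round(r["amount"] * TO_USD[r["currency"]], 2)  # translation
        cleaned.append(r)
    totals = defaultdict(float)  # aggregation: revenue per country
    for r in cleaned:
        totals[r["country"]] += r["amount_usd"]
    return cleaned, dict(totals)

cleaned, totals = transform(raw)
print(totals)  # {'US': 100.0, 'DE': 55.0}
```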

The transformation phase resembles food preparation: each ingredient is prepared according to a specific procedure. The goal is data that is accurate, consistently formatted, and ready for analysis.

3. Load: Moving Data to the Target System

The final step, Load, moves data from the staging area to the target system, usually a data warehouse or data lake. This is where the data is stored for future analysis, reporting, and business intelligence.

There are several ways to load data, for instance:

- Full Load: Every record held by the source is moved to the target storage. This is mostly used during initial setup.

- Incremental Load: Only new or changed data is loaded, usually at preset intervals, so that the target stays up to date.
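The two loading methods can be sketched against a hypothetical `orders_dw` table in SQLite. The full load replaces the table's contents inside one transaction; the incremental load uses SQLite's `INSERT OR REPLACE` to insert new rows and overwrite changed ones by primary key. `INSERT OR REPLACE` rewrites the whole row, which is acceptable here because every incoming row carries all columns.

```python
import sqlite3

def create_target(conn):
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders_dw "
        "(id INTEGER PRIMARY KEY, amount REAL, updated_at TEXT)")

def full_load(conn, rows):
    """Full load: replace the target's contents with every row from the source."""
    with conn:  # one transaction: all rows land, or none do
        conn.execute("DELETE FROM orders_dw")
        conn.executemany("INSERT INTO orders_dw VALUES (?, ?, ?)", rows)

def incremental_load(conn, changed_rows):
    """Incremental load: insert new rows and overwrite changed ones, matched by primary key."""
    with conn:
        conn.executemany(
            "INSERT OR REPLACE INTO orders_dw (id, amount, updated_at) VALUES (?, ?, ?)",
            changed_rows)

conn = sqlite3.connect(":memory:")
create_target(conn)
full_load(conn, [(1, 10.0, "2024-05-01T09:00:00"), (2, 25.0, "2024-05-01T10:00:00")])
incremental_load(conn, [(2, 30.0, "2024-05-02T08:00:00"), (3, 5.0, "2024-05-02T09:00:00")])
print(conn.execute("SELECT * FROM orders_dw ORDER BY id").fetchall())
```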

Loading is usually automated and scheduled during off-peak hours to avoid affecting system operations.

In a nutshell, the ETL process addresses data integrity, correctness, and availability for in-depth analysis. Each phase contributes to the end goal: accurate, actionable data insights for making business decisions.

Real-World Applications of ETL and Available Tools

Let’s dive into how ETL shines in real-world applications. Each use case shows why ETL isn’t just a technical process but a strategic tool that keeps data-driven decisions on point and timely. Plus, we’ll look at the different ETL tools that can make these transformations happen—so you can see what might be right for your needs.

Key ETL Use Cases

1. Data Migration: Out with the Old, In with the New

Imagine you’ve been storing years of customer and transaction data on an older system, but now it’s time to move to something faster and more robust. ETL steps in, helping to shift this treasure trove of data seamlessly into a modern data warehouse, keeping all the insights intact. It’s like giving your data a new home—without losing any history.

2. Centralized Data Management: Your Data, All in One Place

Many organizations work with data scattered across systems: CRM, marketing platforms, inventory management, you name it. ETL brings it all together into one centralized view. Think of it as your data’s “great unifier,” taking pieces from every corner of your operation and creating a cohesive story that’s easy to read and interpret.
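One simple way to build that unified view is a per-source field mapping: each system's column names are renamed onto one canonical schema, and every record keeps a `source` tag so it can be traced back. The mappings and field names below are hypothetical examples, not the schema of any real CRM or marketing platform.

```python
# Hypothetical per-source field mappings onto one canonical schema.
FIELD_MAPS = {
    "crm": {"AccountId": "customer_id", "Email": "email", "Country": "country"},
    "marketing": {"contact_id": "customer_id", "contact_email": "email", "geo": "country"},
}
CANONICAL = ["customer_id", "email", "country"]

def to_canonical(source, record):
    """Rename source-specific fields; unmapped fields are dropped, missing ones become None."""
    mapping = FIELD_MAPS[source]
    renamed = {mapping[k]: v for k, v in record.items() if k in mapping}
    out = {field: renamed.get(field) for field in CANONICAL}
    out["source"] = source  # keep lineage for traceability
    return out

def unify(batches):
    """batches: iterable of (source_name, list_of_records)."""
    return [to_canonical(src, rec) for src, records in batches for rec in records]

rows = unify([
    ("crm", [{"AccountId": "C-1", "Email": "a@example.com", "Country": "US", "Owner": "kim"}]),
    ("marketing", [{"contact_id": "C-1", "contact_email": "a@example.com"}]),
])
for r in rows:
    print(r)
```

Keeping the mappings as data rather than code means adding a new source is mostly a matter of adding one more dictionary entry.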

3. Data Enrichment: Adding That Extra Layer of Insight

Ever wish you could take what you know about your customers and make it even richer? ETL can help by enriching data from multiple sources. For example, let’s say you have customer data from your CRM, and you want to combine it with social media feedback—ETL enables you to bring these pieces together, creating a fuller, more detailed customer profile. Now, marketing and customer service teams can get insights they couldn’t see before!
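As a sketch of the CRM plus social media example, assume a sentiment score has already been computed for each piece of feedback (a hypothetical upstream step). A `LEFT JOIN` then attaches a feedback summary to every CRM customer, including customers who have no feedback yet. The table and column names are illustrative.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE crm_customers (customer_id TEXT PRIMARY KEY, name TEXT, segment TEXT);
CREATE TABLE social_feedback (customer_id TEXT, channel TEXT, sentiment REAL);
""")
conn.executemany("INSERT INTO crm_customers VALUES (?, ?, ?)", [
    ("C-1", "Acme Corp", "enterprise"),
    ("C-2", "Bolt Ltd", "smb"),
])
conn.executemany("INSERT INTO social_feedback VALUES (?, ?, ?)", [
    ("C-1", "twitter", 0.8),
    ("C-1", "reviews", 0.4),
])

# LEFT JOIN keeps every CRM customer, even those with no social feedback yet.
ENRICH_SQL = """
SELECT c.customer_id, c.name, c.segment,
       COUNT(f.customer_id) AS mentions,
       AVG(f.sentiment)     AS avg_sentiment
FROM crm_customers c
LEFT JOIN social_feedback f ON f.customer_id = c.customer_id
GROUP BY c.customer_id, c.name, c.segment
ORDER BY c.customer_id
"""
profiles = conn.execute(ENRICH_SQL).fetchall()
for p in profiles:
    print(p)
```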

4. Analytics-Ready Data: Streamlined for Insights

Analytics tools love organized, structured data, and ETL is a matchmaker here. By transforming data into consistent formats and structures, ETL gives you clean, organized data that’s ready to jump into your analysis pipeline. So, instead of wasting time wrangling data into shape, your analysts can start answering the big questions right away.

5. Compliance: Keeping Data Privacy in Check

Data privacy isn’t just a nice-to-have; it’s a must-have, especially with regulations like GDPR, HIPAA, and CCPA. ETL supports this by allowing you to scrub, structure, and monitor your data to meet compliance standards. So, while you’re focused on gaining insights, ETL keeps your data in the clear and within legal boundaries.

Picking the Right ETL Tool: What’s Your Perfect Fit?

With different needs come different tools. Here’s a rundown of ETL tools and how they’re built to fit specific tasks.

- Batch Processing ETL Tools: The Classic Option

Batch processing is tried and true, ideal for handling large amounts of data after-hours when the systems are quieter. Traditional batch tools were constrained to running only during low-traffic times. But today, cloud-based batch tools provide much more flexibility, letting you schedule or run processes at your convenience. If your data processes don’t need real-time speed, this could be a reliable option.
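Batch jobs typically read and write large tables in fixed-size chunks, so memory use stays bounded and each chunk commits independently. The sketch below uses `fetchmany` from Python's `sqlite3` module to process a hypothetical `events` table in batches; the batch size and the cents-to-dollars transform are arbitrary assumptions for illustration.

```python
import sqlite3

BATCH_SIZE = 1000  # hypothetical; tune to memory and transaction-size limits

def run_batch_job(src, dst, batch_size=BATCH_SIZE):
    """Move and transform rows in fixed-size batches; return the number of rows moved."""
    dst.execute("CREATE TABLE IF NOT EXISTS events_clean (id INTEGER PRIMARY KEY, value REAL)")
    cursor = src.execute("SELECT id, raw_value FROM events ORDER BY id")
    moved = 0
    while True:
        batch = cursor.fetchmany(batch_size)
        if not batch:
            break
        # Example transform: integer cents -> dollars.
        cleaned = [(row_id, raw / 100.0) for row_id, raw in batch]
        with dst:  # commit each batch in its own transaction
            dst.executemany("INSERT INTO events_clean VALUES (?, ?)", cleaned)
        moved += len(cleaned)
    return moved

src = sqlite3.connect(":memory:")
src.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, raw_value INTEGER)")
src.executemany("INSERT INTO events VALUES (?, ?)", [(i, i * 10) for i in range(1, 2501)])
dst = sqlite3.connect(":memory:")
print(run_batch_job(src, dst))  # 2500, processed as batches of 1000, 1000 and 500
```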

- Cloud-Native ETL Tools: Built for Today’s Data

If you’re working in the cloud (and who isn’t these days?), cloud-native ETL tools are optimized for you. They handle data extraction, transformation, and loading directly into cloud data warehouses, using the cloud’s power to handle complex transformations at scale. These tools can handle large, diverse datasets, making them a smart choice for teams with big data ambitions.

- Open-Source ETL Tools: Flexible and Budget-Friendly

Maybe you’re looking for something low-cost, with room for customization. Open-source tools like Apache Kafka, a distributed event-streaming platform often used to move data between systems, offer flexibility, especially if your team has technical expertise. You’ll benefit from cost savings and can adapt the tool to fit your unique workflows. Just keep in mind that some open-source tools, Kafka included, cover only parts of ETL or require additional modules for advanced functions.

- Real-Time ETL Tools: Keeping Up with the Speed of Business

If your business relies on the latest, most up-to-date data (think stock trading, real-time ads, or IoT applications), real-time ETL tools are essential. These tools continuously process data as it’s generated, making it possible to take quick action based on fresh insights. Streaming capabilities are the name of the game here, so if that sounds like your need, these tools are worth a closer look.
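Real-time tools usually aggregate events in time windows as they arrive instead of waiting for a nightly batch. The sketch below shows a tumbling (fixed, non-overlapping) one-minute window over hypothetical click events, emitting each window's counts as soon as the window closes. It assumes events arrive in timestamp order; production stream processors also handle late and out-of-order data, which this sketch does not.

```python
from collections import defaultdict
from typing import Iterable, Iterator, Tuple

Event = Tuple[int, str]  # (epoch_seconds, campaign_id), a hypothetical event shape
WINDOW = 60              # hypothetical one-minute tumbling window

def windowed_counts(events: Iterable[Event], window: int = WINDOW) -> Iterator[Tuple[int, dict]]:
    """Yield (window_start, {campaign: clicks}) each time a window closes.

    Assumes events arrive in timestamp order.
    """
    current_start = None
    counts = defaultdict(int)
    for ts, campaign in events:
        start = ts - ts % window
        if current_start is not None and start != current_start:
            yield current_start, dict(counts)  # window closed: emit downstream
            counts.clear()
        current_start = start
        counts[campaign] += 1
    if current_start is not None:
        yield current_start, dict(counts)  # flush the last open window

clicks = [(0, "spring_sale"), (15, "spring_sale"), (42, "brand"), (61, "brand"), (130, "spring_sale")]
for window_start, per_campaign in windowed_counts(clicks):
    print(window_start, per_campaign)
```

Because `windowed_counts` is a generator, it works the same way over an unbounded iterator (for example, messages read from a queue) as over this finite list.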

Introducing DataBeat’s Ingestion: Your Ultimate ETL Solution

At DataBeat, we take ETL a step further with Ingestion, our powerful, easy-to-use ETL tool designed for today’s fast-paced data environments. Whether you are integrating disparate data sources or streamlining workflows for seamless real-time analytics, Ingestion makes it easier. With Ingestion, you can extract, transform, and load data from any source into your central data warehouse or data lake, where it stays clean, consistent, and ready for analysis.

Unlike many traditional ETL tools, Ingestion is specifically designed to handle complex transformations and large datasets efficiently. Our platform also supports both ETL and ELT, so teams can manage data jobs in whichever way best suits their workflow.

Using ETL means knowing what data journey you need—and with the right tool, you’ll be able to manage data flows with efficiency, speed, and accuracy. Whether it’s moving data from one place to another, blending different sources, or simply keeping everything in compliance, ETL is there to make it happen.

At DataBeat, we don’t just help you move data; we empower you to transform how your business operates. With our cutting-edge platform, you can effortlessly design, develop, and manage data jobs that seamlessly integrate and process data, using both ETL and ELT methods. Whether you’re tackling complex data migration, consolidating multiple data sources, or enabling real-time analytics, DataBeat offers a solution tailored to your unique needs. Let us help you unlock the full potential of your data, so you can make smarter decisions faster, with confidence. Ready to take your data to the next level? Let’s get started!